Create a bucket

Log into your AWS console, go to the Amazon S3 tool, and click Create bucket.

Give it a unique name and click Next.

I am going to leave the defaults here and click Next.

The default settings are good for me on this page as well. I want my AWS user to have read/write access to this bucket, even though I am not going to use that user to mount the S3 bucket. I also want to keep the bucket private, so I leave “Do not grant public read access” selected. Click Next.

Click Create Bucket!

There is the bucket!

Create a user with credentials

Now I want to create a user with credentials who has permissions to read/write to this new bucket.

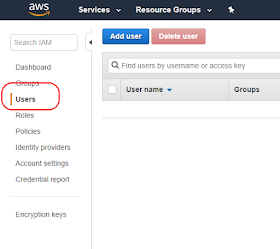

From the AWS console open up the IAM tool and click on Users.

Click Add user.

Enter a user name, select Programmatic Access and click Next: Permissions.

Select Attach existing policies and search for s3.

Click on AmazonS3FullAccess and click on JSON.

This JSON policy

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:*",
                "Resource": "*"
            }
        ]
    }

is close to what I want. It gives read/write access, but to all my S3 buckets. I want to tweak this slightly to limit it to a single bucket.

Here is a policy that will do what we want.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": ["arn:aws:s3:::a-test-bucket-124568d"]
            },
            {
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject"
                ],
                "Resource": ["arn:aws:s3:::a-test-bucket-124568d/*"]
            }
        ]
    }
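If you want the same policy for a different bucket, only the two ARNs change: the bare bucket ARN for s3:ListBucket and the bucket ARN followed by /* for the object actions. Here is a minimal sketch that prints the policy for any bucket name. The make_bucket_policy function is a hypothetical helper of my own, not part of any AWS tool.

```shell
#!/bin/sh
# make_bucket_policy BUCKET
# Hypothetical helper: prints a read/write policy limited to one bucket.
make_bucket_policy() {
    bucket="$1"
    cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": ["arn:aws:s3:::${bucket}"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
      "Resource": ["arn:aws:s3:::${bucket}/*"]
    }
  ]
}
EOF
}

# Print the policy for the test bucket used in this post.
make_bucket_policy a-test-bucket-124568d
```

The output can be pasted into the JSON tab of the policy editor.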

Click on Create Policy

Click on JSON and paste the policy in.

Click Review Policy

Give it a name and click Create Policy

The policy has been created!

From the create user page select “Customer Managed” from the pull down menu.

Then click Refresh

Select the policy you just made and click Next: Review

Click Create User.

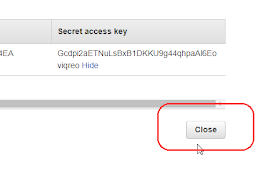

Record your Access Key ID and show your secret access key.

In my case

Access Key: AKIAJETFXNV4NYVV64EA

Secret: Gcdpi2aETNuLsBxB1DKKU9g44qhpaAl6Eoviqreo

(Don’t worry, I am deleting this bucket and user after writing this how-to.)

Click Close; you are done with this part.

Install s3fs

On Ubuntu 16.04 install the following tools

> sudo apt-get install automake autotools-dev fuse g++ git libcurl4-gnutls-dev libfuse-dev libssl-dev libxml2-dev make pkg-config

Download the s3fs tool from GitHub, https://github.com/s3fs-fuse/s3fs-fuse [1], and run the following to install it.

> git clone https://github.com/s3fs-fuse/s3fs-fuse.git

> cd s3fs-fuse

> ./autogen.sh

> ./configure

> make

> sudo make install

Create the /etc/passwd-s3fs file

> sudo vi /etc/passwd-s3fs

Add a single line in the form ACCESS_KEY_ID:SECRET_ACCESS_KEY

So in my case I would put

AKIAJETFXNV4NYVV64EA:Gcdpi2aETNuLsBxB1DKKU9g44qhpaAl6Eoviqreo


Save it, then chmod it to 640

> sudo chmod 640 /etc/passwd-s3fs
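The two steps above (write the KEY_ID:SECRET line, then restrict the permissions) can also be done without an editor. Here is a minimal sketch, assuming GNU coreutils as on Ubuntu. The write_passwd_file function is a hypothetical helper of mine, and the demo writes placeholder values to a scratch file rather than real credentials to /etc/passwd-s3fs.

```shell
#!/bin/sh
# write_passwd_file FILE KEY_ID SECRET
# Hypothetical helper: writes "KEY_ID:SECRET" to FILE with mode 640.
write_passwd_file() {
    file="$1"; key_id="$2"; secret="$3"
    # Create (or truncate) the file under a restrictive umask first,
    # so the secret never sits in a world-readable file.
    ( umask 027 && : > "$file" ) || return 1
    chmod 640 "$file" || return 1
    printf '%s:%s\n' "$key_id" "$secret" > "$file"
}

# Demo against a scratch file with placeholder values.
demo="$(mktemp)"
write_passwd_file "$demo" "EXAMPLEKEYID" "example-secret"
ls -l "$demo"
rm -f "$demo"
```

For the real file you would run the function as root with /etc/passwd-s3fs as the first argument.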

Mount it!

Create a mount point and mount it!

> sudo mkdir -p /s3/bucket-test

> sudo s3fs -o allow_other a-test-bucket-124568d /s3/bucket-test
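s3fs does not print anything when the mount succeeds, so it can help to confirm the mount before writing to it. Here is a minimal sketch that looks for the mount point in /proc/mounts (the second field of each line is the mount point). The is_mounted function is a hypothetical helper of mine; the optional second argument only exists so it can be tried against a sample file.

```shell
#!/bin/sh
# is_mounted MOUNT_POINT [MOUNTS_FILE]
# Hypothetical helper: succeeds if MOUNT_POINT appears as a mount point
# in MOUNTS_FILE (default: /proc/mounts).
is_mounted() {
    awk -v target="$1" '$2 == target { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}" 2>/dev/null
}

if is_mounted /s3/bucket-test; then
    echo "/s3/bucket-test is mounted"
else
    echo "/s3/bucket-test is not mounted"
fi
```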

It’s mounted; now let me write a file to it.

Let me use /dev/urandom to create a 100 MiB file of random data in /tmp

> cd /tmp

> dd if=/dev/urandom of=random.txt count=1048576 bs=100
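The file size comes from count times bs. A quick shell arithmetic check, using the same two numbers as the dd command above, confirms that this is exactly 100 MiB:

```shell
#!/bin/sh
# Same values as the dd command: 1048576 blocks of 100 bytes each.
count=1048576
bs=100
bytes=$((count * bs))
echo "bytes: $bytes"
echo "MiB:   $((bytes / 1024 / 1024))"
```

This prints 104857600 bytes and 100 MiB. A larger block size with a smaller count (for example bs=1M count=100 with GNU dd) gives the same size with far fewer read and write calls.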

Now copy it over to the S3 bucket

> cp /tmp/random.txt /s3/bucket-test/

Which fails because only root can write to it at the moment…

Let me use sudo

> sudo cp /tmp/random.txt /s3/bucket-test/

OK that worked…

But how do I copy files over if I am not root?

I think I have an issue with the /etc/fuse.conf file…

> sudo vi /etc/fuse.conf

Uncomment the user_allow_other line
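To check whether the option is active without opening the file, you can grep for an uncommented user_allow_other line. Here is a minimal sketch. The user_allow_other_enabled function is a hypothetical helper of mine, and the optional argument is only there so it can be pointed at a test copy of the file.

```shell
#!/bin/sh
# user_allow_other_enabled [CONF_FILE]
# Hypothetical helper: succeeds if CONF_FILE (default: /etc/fuse.conf)
# contains user_allow_other on a line that is not commented out.
user_allow_other_enabled() {
    grep -Eq '^[[:space:]]*user_allow_other[[:space:]]*$' \
        "${1:-/etc/fuse.conf}" 2>/dev/null
}

if user_allow_other_enabled; then
    echo "user_allow_other is enabled"
else
    echo "user_allow_other is commented out or missing"
fi
```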

Unmount the s3 drive and remount it.

> sudo umount /s3/bucket-test

> sudo s3fs -o allow_other a-test-bucket-124568d /s3/bucket-test

Now try to copy a file over.

> cp /tmp/random.txt /s3/bucket-test/random2.txt

Worked

Mount using /etc/fstab

First let me unmount the s3 bucket

> sudo umount /s3/bucket-test

Open and edit /etc/fstab

> sudo vi /etc/fstab

And append the following line to the bottom of the file (it must be a single line)

    s3fs#a-test-bucket-124568d /s3/bucket-test fuse retries=5,allow_other,url=https://s3.amazonaws.com 0 0
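An fstab entry has six whitespace-separated fields: device, mount point, filesystem type, options, dump, and pass. Here the "device" is s3fs# followed by the bucket name. Here is a minimal sketch that builds the same entry for any bucket and mount point and counts the fields. The fstab_line function is a hypothetical helper of mine.

```shell
#!/bin/sh
# fstab_line BUCKET MOUNT_POINT
# Hypothetical helper: prints an fstab entry in the same form as the
# line used in this post.
fstab_line() {
    printf 's3fs#%s %s fuse retries=5,allow_other,url=https://s3.amazonaws.com 0 0\n' \
        "$1" "$2"
}

line="$(fstab_line a-test-bucket-124568d /s3/bucket-test)"
echo "$line"
# Sanity check: a valid entry should have exactly six fields.
echo "$line" | awk '{ print NF " fields" }'
```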

Here you can see the bucket and the mount point.

Now mount it

> sudo mount /s3/bucket-test

Run a quick test

> cp /tmp/random.txt /s3/bucket-test/random3.txt

That worked!

Also, I do not think I need user_allow_other in the fuse.conf file

> sudo vi /etc/fuse.conf

Comment out the user_allow_other line

I am just going to reboot at this point and see if it works with the new fuse.conf file and also if it automounts

> sudo reboot now

Wahoo, it is all working :)

So that’s how you mount an S3 bucket as a drive in Ubuntu 16.04

References

[1] s3fs GitHub repo, https://github.com/s3fs-fuse/s3fs-fuse

Comments

This was very helpful to me. Thank you!

Can you do a tutorial on how to do this on a CentOS-based machine? I am running into some dependency issues trying to do this on a RHEL-based EC2 instance.

For CentOS: https://cloudkul.com/blog/mounting-s3-bucket-linux-ec2-instance/

When I tried to mount, it returned with no error, but the mount did not happen. Can you advise what the issue might be? I am trying to mount it on an Ubuntu 18.02 instance.

I had the same issue; the _netdev mount option solved it. Perhaps this is worth a try?

This was an awesome how-to! Thank you!

Thank you for this one! I just connected my Wasabi S3 storage. It works the same way; just the URL is different. Example of an s3fs command:

    sudo s3fs bucket mountpoint -o passwd_file=/etc/passwd-s3fs -o url=https://s3.wasabisys.com -o allow_other

It still has some issues (automount upon reboot does not work yet, no idea why), but I will proceed with fingers crossed.

... and now even the mount problems are gone: the _netdev mount option solved the problem.

This was super helpful to me! Thank you!!!

Great tutorial, worked perfectly.
